What AI Systems Actually Read: Writing Discussions That Get Cited

Here's something wild: as of mid-2024, roughly 60% of Google searches ended without a click, and AI-generated answers are accelerating that trend. More and more people aren't clicking through to websites at all. They're getting answers directly from ChatGPT, Perplexity, and Google's AI Overviews.

But here's the good news: the discussions and answers that AI systems love to cite are the exact same ones your community members bookmark and say "this saved my day." You don't need to transform your community into a soulless documentation repository. You just need to understand what makes content easy for AI systems to parse, extract, and reference.

In this post in the GEO Action Series, we'll look at the anatomy of AI-citable discussions and how to encourage them naturally in your community.

Forums Have a Natural Advantage in the Age of AI

Before we dive into specifics, understand this: if you have a community, you're 100% playing with a stacked deck.

AI systems are trained in part on forum content. ChatGPT, Claude, and other large language models learned from public text that includes Stack Overflow threads, Reddit discussions, and countless other community conversations. The question → answer → refinement pattern isn't something you need to create. It's already baked into how most communities naturally operate.

Community discussions also capture something technical knowledge bases can't: the diagnostic journey. (Author's note: I'm leaning hard into Star Trek technobabble for the examples in this post.) When someone posts "My warp core is showing 1.7 terajoule fluctuations" and the community works through troubleshooting (checking this, ruling out that, eventually discovering a fractured dilithium matrix), that entire conversation is valuable.

AI systems don't just want the final answer; they want the context of how you get to the answer.

Plus, unlike static documentation that goes stale, community threads evolve. Someone adds "Still works in 2025" or "With the new replicator firmware, you don't need this step anymore." That living, breathing quality signals to AI systems that the information is current and maintained.

The Anatomy of an AI-Citable Discussion

Let's break down what makes AI systems think "yes, this is worth citing" by looking at actual discussion patterns.

The Complete Diagnostic Thread

What it looks like:

Thread title: "Transporter shimmer during materialization - Danube-class runabout"

Original post:

We're getting a noticeable shimmer effect during transport, and the cycle time has increased from 4.5 to 6.2 seconds. Running Transporter OS 7.3, pattern buffers show 94% integrity on diagnostics. Already tried recalibrating the phase transition coils - no change. Any ideas?

Accepted answer:

Classic symptom of annular confinement beam drift on Type-7 transporters. The longer cycle time is the key clue here. Here's the fix:

1- Access the ACB emitter array (behind panel 7-Alpha in the transporter alcove)

2- Run a level-2 diagnostic specifically on the targeting scanners - not the emitters themselves. You're looking for a targeting variance above 0.02 micrometers.

3- Recalibrate using the manual adjustment protocol in the maintenance manual (page 147)

4- The shimmer should resolve immediately; cycle time will normalize after one full power cycle

5- This issue is specific to Danube-class because of how the compact transporter system shares power with the impulse engines. If you're experiencing this during or right after flight maneuvers, that confirms the diagnosis.

Follow-up:

That was it! Targeting variance was at 0.04. Fixed in 20 minutes. Thanks!

Why AI systems love this:

- Specific problem with context (ship class, symptoms, what was already tried)

- Clear diagnostic path with reasoning

- Numbered steps anyone can follow

- Explanation of why this happens

- Verification that it worked

What makes it different from basic Q&A: It's not just "do X." It's "here's the symptom pattern, here's the diagnostic reasoning, here's why this happens in this specific situation, here's the fix."

The Comparison Thread

What it looks like:

Thread title: "Shield modulation vs. frequency rotation for Borg encounters - which is better?"

Top answer:

"Both are valid approaches, but they work best in different scenarios. Let me break it down:

Shield Modulation (rotating frequencies continuously)

- Best for: Prolonged engagement, multiple Borg vessels

- Works on: All Federation ship classes with tactical computer version 8.0+

- Advantage: Borg take longer to adapt (typically 15-20 hits vs. 8-10)

- Disadvantage: Higher power consumption (increases shield load by 23%)

- Use when: You have stable power and expect extended combat

Frequency Rotation (fixed frequencies, switching between preset patterns)

- Best for: Short engagements, hit-and-run tactics

- Works on: All ship classes, including older vessels

- Advantage: Lower power consumption, works with limited tactical computer

- Disadvantage: Borg adapt faster (8-12 hits before adaptation)

- Use when: You're low on power or flying an older ship

Real-world example: During the Defiant's patrols, we used modulation for planned engagements but kept frequency rotation as backup for when we were running on auxiliary power. Saved us twice when primary power was compromised.

For ships without tactical computer upgrades (most Excelsior-class refits), frequency rotation is your only option and it's perfectly adequate for most situations."

Why AI systems love this:

- Direct comparison with clear criteria

- Context for when to use each approach

- Specific technical requirements

- Real-world application example

- Acknowledges limitations and edge cases

What makes it different: Instead of "use X!" it's "here's when X makes sense vs. when Y makes sense, and here's why."

The Evolution Thread

What it looks like:

Original post from 2023:

How do I adjust replicator rations on a Cardassian station?

Original answer:

Access Ops, go to Systems > Food Service > Ration Allocation. Default is 1.2 per person/day.

2024 update comment:

Note: As of Cardassian OS 2.8 (released March 2024), this menu moved to Ops > Station Services > Replicator Management. The allocation interface also changed - you now set it per section rather than station-wide.

2025 moderator note:

✓ Verified current as of January 2025. The 2.8 interface is now standard. For stations still running pre-2.8 (like older Nor-class stations), the original answer still applies. See this thread for upgrade guidance: [link]

Why AI systems love this:

- Shows temporal context and evolution

- Multiple time-stamped updates

- Clear indication of what's current

- Links to related information

- Acknowledges different versions/situations

What makes it different: It's not static. It's maintained. AI systems can see this is actively curated information.

The "Here's What Actually Happens" Thread

What it looks like:

Thread title: "Emergency warp core ejection - actual field experience vs. manual procedures"

Post:

I've been Chief Engineer for 8 years across three Galaxy-class ships. The Starfleet manual gives you the official procedure, but here's what actually happens during emergency ejection and what you should know:

What the manual says:

1- Initialize ejection sequence

2- Verify explosive bolts

3- Execute ejection

4- Confirm separation

What actually happens in the field:

The bolt verification step fails about 30% of the time due to sensor glitches - especially if you're already dealing with core instability. Here's the reality-tested approach:

1- Initialize ejection sequence (same as manual)

2- Run bolt verification - but don't abort if it fails

3- Perform manual visual inspection through deck 11 access port (takes 15 seconds, way faster than re-running diagnostics)

4- If bolts look properly positioned, override the sensor check and proceed

5- Execute ejection

6- Confirm separation

Critical gotcha the manual doesn't mention: On ships built before 2370, the ejection system shares a power relay with the structural integrity field. If you're in combat and SIF is under stress, you might not have enough power for clean ejection. In those cases, you need to route auxiliary power to the ejection system before initiating the sequence.

This has been tested across 12 core ejections in training exercises and 2 real emergencies. It works.

See also the official Starfleet Engineering Bulletin 2371-08 for the updated procedure that incorporates some of these field learnings: [link]

Why AI systems love this:

- Practical experience backing up theory

- Specific numbers and timeframes

- Explains why official procedures sometimes fall short

- Provides context (ship class, year, conditions)

- Links to official documentation

- Clear indication of real-world testing

What makes it different: It combines authoritative sources with practical experience and explains the gaps between theory and practice.

Content Structure That AI Craves

Now let's talk about the structural bones of a post: the patterns that make it easy for AI systems to extract and cite.

Front-Loaded Answers

When there is a question, put the solution or key information first, then elaborate. People want the answer to their problem as quickly as possible; if it works, they can read later about how the community arrived at it.

AI-friendly structure:

Solution: Reroute auxiliary power to the damaged EPS conduit through junction 42-alpha. This bypasses the failed relay.

Why this works: The main relay at junction 39 is likely blown from the overload. Junction 42-alpha connects to the same distribution network but routes through a different relay cluster...

Why it works: AI systems often extract the first substantive paragraph of an answer. Front-loading means the most important information gets captured even if the rest gets cut off.
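To make that concrete, here is a deliberately naive sketch of a snippet extractor that grabs the first "substantive" paragraph of a post. It is an illustration under stated assumptions, not how any real AI system works: the function name and the 40-character threshold are hypothetical, and production systems use far more sophisticated (and undisclosed) pipelines. It still shows why a friendly preamble can crowd out the actual fix.

```python
def first_substantive_paragraph(text: str, min_chars: int = 40) -> str:
    """Return the first paragraph at least `min_chars` long (hypothetical heuristic)."""
    # Treat blank lines as paragraph boundaries, as most forum editors do
    paragraphs = [p.strip() for p in text.split("\n\n")]
    for p in paragraphs:
        if len(p) >= min_chars:
            return p
    return ""


front_loaded = (
    "Solution: Reroute auxiliary power to the damaged EPS conduit through "
    "junction 42-alpha. This bypasses the failed relay.\n\n"
    "Why this works: The main relay at junction 39 is likely blown from the overload."
)
buried = (
    "Great question! I've seen this a few times over the years.\n\n"
    "Solution: Reroute auxiliary power through junction 42-alpha."
)

print(first_substantive_paragraph(front_loaded))  # the fix itself
print(first_substantive_paragraph(buried))        # the small talk, not the fix
```

With the front-loaded version, the extracted snippet is the solution. With the buried version, the same heuristic captures only the pleasantries.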

Descriptive Headers as Signposts

Headers tell AI systems "here's what this section contains."

Good headers:

- "Diagnostic Steps"

- "Common Causes of This Problem"

- "Requirements and Prerequisites"

- "Troubleshooting If Solution Doesn't Work"

- "When to Use This vs. Alternative Approaches"

Not helpful headers:

- "Let's Get Started"

- "Here's What I Think"

- "My Experience"

Headers should be informative labels, not conversational transitions.

Complex Procedures Broken Down Visually

Use numbered lists for sequences:

1- First do this

2- Then do this

3- Now do this

4- Finally do this

Use bullet points for options or characteristics:

- Option A works when...

- Option B works when...

- Option C works when...

Use tables for comparisons:

| Feature | Type-6 Transporter | Type-7 Transporter |
|---|---|---|
| Range | 40,000 km | 50,000 km |
| Buffer | 4 patterns | 6 patterns |
| Biofilter | Standard | Enhanced |

Why it works: Visual structure is easier for both humans and AI to parse. Tables especially help AI systems extract comparative information cleanly.
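Here's a small Python sketch of why tables extract so cleanly: a well-formed pipe table maps almost directly onto structured records. The parser is a simplified assumption for illustration (it ignores escaped pipes and other edge cases) and is not how any particular AI system processes pages.

```python
def parse_pipe_table(table: str) -> list[dict[str, str]]:
    """Turn a simple Markdown-style pipe table into a list of row dicts."""
    rows = []
    for line in table.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Skip the |---|---| separator row between the header and the body
        if all(c and set(c) <= set("-: ") for c in cells):
            continue
        rows.append(cells)
    header, *body = rows
    # Pair every body cell with its column header
    return [dict(zip(header, row)) for row in body]


transporters = parse_pipe_table("""
| Feature | Type-6 Transporter | Type-7 Transporter |
|---|---|---|
| Range | 40,000 km | 50,000 km |
| Buffer | 4 patterns | 6 patterns |
| Biofilter | Standard | Enhanced |
""")

for row in transporters:
    print(row)
```

Each row becomes a self-describing record ("Range" for the Type-7 is "50,000 km"), which is exactly the kind of comparison a prose paragraph makes much harder to pull out.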

Include "Escape Hatches" for Edge Cases

Good answers acknowledge when they don't apply.

Pattern to use:

"This solution works for standard Federation deflector arrays on Galaxy and Nebula-class ships. However:

- If you're on an Excelsior-class refit, you'll need to use the legacy shield interface

- If you're running tactical computer version 6.0 or earlier, see this thread instead: [link]

- If you're under active combat conditions, skip step 3 and go directly to step 4"

Why it works: AI systems value answers that include appropriate caveats. It signals thoughtfulness and accuracy rather than over-confident blanket statements.

Link Generously to Related Content

Generous linking is a long-standing SEO practice, and it works well with AI crawlers too.

Internal links AI systems value:

- "This is related to the plasma flow issue discussed here: [link]"

- "For the background on why this happens, see: [link]"

- "If this solution doesn't work, try the alternative approach here: [link]"

External links AI systems value:

- "According to the Starfleet Engineering Manual: [link to official docs]"

- "See this technical bulletin: [link]"

- "Based on testing documented here: [link to research]"

Why it works: Links create context and credibility chains. They help AI systems understand relationships between topics and verify information against authoritative sources.

Cultivate These Patterns - Don't Force Them

You can't mandate "Everyone Must Write In AI-Citable Format." That's weird and would make content feel inhuman. People come to communities to talk to other people.

Instead, shape behavior through gentle influence.

Use Post Templates to Guide Structure

Vanilla Forums lets you create custom fields for custom post types. Use them strategically, especially in Q&A-centric or technical support categories.

When users open the "New Discussion" dropdown in a technical category, offer a custom post type that asks for the fields that matter in that category. For a technical Q&A category, you might use the following:

Product Type:

System Version:

Problem Description:

What I've Already Tried:

Error Messages or Symptoms:

Additional Context:

Why it works: You're not forcing anything (keep every field optional); you're just making it easier for people to provide complete information.

If you consistently create custom post types with targeted fields and place them strategically in the right areas of your site, that structure becomes the community norm over time.

It's a great idea to work with your top solution experts to figure out which fields matter in the areas they specialize in. If you make answering questions easier for your experts, the answers they provide will only become more valuable.

Highlight Great Examples in Context

Instead of abstract "write better" advice, show specific examples. This is great content to showcase in a 'How to Use the Community' post or article.

Having easily referenced examples for 'good answers' can really set some baseline expectations for the community. Create a post that looks like this:

Want to write answers that really help people? Check out these examples from our community:

- [Link] - Notice how Lt. Torres structured her diagnostic steps

- [Link] - See how Chief O'Brien compared different approaches with clear criteria

- [Link] - Check out how Ensign Kim explained why this solution works, not just what to do

Why it works: People learn by pattern-matching. Show them what good looks like, and they'll naturally start mimicking it.

Use Vanilla's "Rich Text" Editor Features Strategically

Vanilla's Rich Editor includes headers, lists, tables, and code blocks. Make sure that users know these tools exist. Don't hesitate to link to Vanilla's documentation on the subject in any of your onboarding/getting started pages.

Create a quick guide: "Formatting Tips for Clear Answers"

- Use the numbered list button for step-by-step procedures

- Use the bullet list for options or characteristics

- Use the header dropdown to create section titles

- Use the code block for diagnostic output or commands

When staff/moderators answer questions, consistently use:

- Lists for steps or options

- Headers to organize sections

- Tables for comparisons

- Code blocks for technical output

People will see this is the norm and follow suit.

Create a "Thread Improvement" Initiative

Pick 5-10 of your most-viewed threads each month. Review them and:

- Add a TL;DR summary if missing

- Insert headers to break up long answers

- Convert inline steps to numbered lists

- Add tables where comparisons exist

- Insert "2025 Update" notes if something has changed

Frame this initiative publicly: "We're making our most popular threads easier to navigate for everyone!"

Why it works: You're improving content that already has traction. The edits make a real difference for readers while creating better AI-citable structure.
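If you'd like to make the monthly selection repeatable, here's a minimal Python sketch: take the most-viewed threads that have never been reviewed, or haven't been reviewed within a set window. The record fields (`views`, `last_reviewed`), the 180-day window, and the function name are all hypothetical assumptions; adapt them to whatever your analytics export actually provides.

```python
from datetime import date, timedelta

# Hypothetical thread records; real field names depend on your export
threads = [
    {"title": "Transporter shimmer during materialization", "views": 9200, "last_reviewed": date(2024, 3, 1)},
    {"title": "Adjusting replicator rations", "views": 7800, "last_reviewed": date(2025, 1, 10)},
    {"title": "Emergency warp core ejection", "views": 6100, "last_reviewed": None},
    {"title": "Shield modulation vs. frequency rotation", "views": 400, "last_reviewed": None},
]


def pick_threads_to_improve(threads, today, limit=5, stale_after_days=180):
    """Most-viewed threads that were never reviewed or not reviewed within the window."""
    cutoff = today - timedelta(days=stale_after_days)
    candidates = [
        t for t in threads
        if t["last_reviewed"] is None or t["last_reviewed"] < cutoff
    ]
    # Highest-traffic threads first, since that's where edits help the most readers
    candidates.sort(key=lambda t: t["views"], reverse=True)
    return [t["title"] for t in candidates[:limit]]


print(pick_threads_to_improve(threads, today=date(2025, 2, 1), limit=2))
```

The recently reviewed replicator thread drops out even though it has high traffic, so each month's effort goes to popular threads that haven't had attention lately.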

Don't forget that you're a part of a community. Reach out to a few trusted power users to help with this. Award a "Content Curator" badge or rank to show the impact that they're having on increasing the quality of community content.

Vanilla Forums Features That Support Post Quality

Let's get specific about platform features you should be tapping into.

Leverage "Reactions" for Content Quality Signals

Your community probably has a few reactions disabled. Consider repurposing some of them to discourage zero-impact comments.

Edit an unused reaction to provide users with a way to say: "I'm also having this problem!" That way, they can hit that particular reaction button on a relevant question rather than commenting: "+1" or "I need help with this too"

This keeps threads clean and focused on actual solutions.

While you're at it, consider some other reaction updates.

Beyond the generic "like" or "upvote," consider adding:

- "This Solved My Problem" reaction (different from marking it as Best Answer, which only the post author or moderators can do)

- "Well Explained" reaction

- "Needs More Detail" reaction

Why it works: These reactions can give AI systems additional visible signals about content quality and usefulness without requiring formal voting. They also give users a low-effort way to engage with posts that answered their question or got them to a solution.

If you're into analytics, knowing which posts are getting these types of reactions can help you identify high value comments worth highlighting or discussions with comments that need some help.

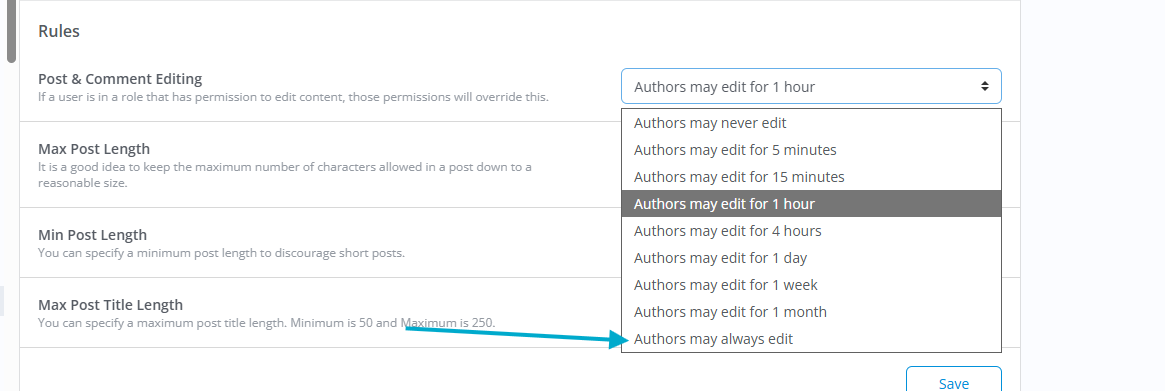
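As a starting point for that kind of analysis, here's a minimal Python sketch that ranks posts by a given reaction. The record shape (one `post_id` plus `reaction` name per reaction) and the helper name are hypothetical assumptions for illustration, not Vanilla's actual export format or API.

```python
from collections import Counter

# Hypothetical export: one record per reaction given
reactions = [
    {"post_id": 101, "reaction": "This Solved My Problem"},
    {"post_id": 101, "reaction": "Well Explained"},
    {"post_id": 101, "reaction": "This Solved My Problem"},
    {"post_id": 202, "reaction": "Needs More Detail"},
    {"post_id": 202, "reaction": "Needs More Detail"},
    {"post_id": 303, "reaction": "Well Explained"},
]


def posts_by_reaction(records, reaction_name, min_count=1):
    """Return (post_id, count) pairs for one reaction, most-reacted first."""
    counts = Counter(r["post_id"] for r in records if r["reaction"] == reaction_name)
    return [(pid, n) for pid, n in counts.most_common() if n >= min_count]


highlight = posts_by_reaction(reactions, "This Solved My Problem")
needs_help = posts_by_reaction(reactions, "Needs More Detail", min_count=2)
print(highlight)   # candidates to feature
print(needs_help)  # candidates for a moderator follow-up
```

The `min_count` threshold keeps a single stray "Needs More Detail" click from sending a moderator to a post that's actually fine.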

Embrace Editing For Evergreen Content

Allowing users to edit their posts lets them continuously improve their answers as they learn more or as situations change.

Make it policy for community staff to add an "Edited on [date] to [describe the change]" note to posts they update, especially posts not written by staff.

Why it works: This transparency shows active maintenance (AI loves evergreen content!) without hiding the language of the original post.

"Mark as Outdated" Flag

Let trusted users flag content as potentially outdated. This triggers moderator review.

Enable the 'Reporting' addon (see Vanilla's documentation), then navigate to /settings/reporting to add an appropriately named 'OUTDATED' report reason.

When flagged, mods can:

- Add an update

- Link to newer solution

- Archive if no longer relevant

Why it works: Keeps your highest-traffic content current without requiring constant proactive monitoring.

Discussion Threading Options

Nested Replies can be enabled in a category by applying a custom post layout to that category - this can be especially handy in technical areas of your community, letting diagnostic conversations branch naturally:

- Main answer

- Follow-up question about step 3

- Alternative approach

- Comparison of when to use each

Why it works: Threading makes complex troubleshooting conversations easier for AI to parse by showing a clear relationship between question and response.

An Announcement System for Evolving Information

When something significant changes (new system version, updated procedures), create an announcement that appears at the top of affected categories:

⚠️ As of January 2025, all Cardassian stations should use OS 2.8.

Configuration procedures have changed. See the updated guide: [link]

After an appropriate amount of time (for example, once the next update ships), you can un-announce that discussion.

Why it works: Provides a date/time context that helps AI systems understand when information became current or outdated.

Top Tier Content Patterns

Here are discussion types that tend to generate highly-citable content - and how to encourage them.

The "Complete Walkthrough" Thread

What it looks like: "Setting up a new replicator terminal - complete guide from unboxing to first use"

A comprehensive, step-by-step guide that someone could follow blindly and succeed.

How to encourage:

- Feature these prominently

- Award a "Guide Writer" badge

- Pin the best ones to category tops

- Ask experienced users: "Would you consider writing a complete walkthrough for [common task]?"

The "Version Comparison" Thread

What it looks like: "Differences between Cardassian OS 2.6, 2.7, and 2.8 - what changed and what it means"

Documents evolution and helps people understand what's current.

How to encourage:

- When major updates happen, explicitly ask: "Anyone up for documenting what changed?"

- Create a "Version History" category or KB

- Link to these threads from troubleshooting discussions

The "Gotcha Collection" Thread

What it looks like: "Common mistakes when calibrating shield arrays - and how to avoid them"

Captures the tribal knowledge of edge cases and common pitfalls.

How to encourage:

- Occasionally start a "What gotchas have you learned?" thread

- Compile responses into a permanent reference

- These are gold for AI systems because they capture nuance

The "Tool/Resource List" Thread

What it looks like: "Essential diagnostic tools every engineer should have bookmarked"

Curated collections of useful resources.

How to encourage:

- Pin these as category resources

- Keep them updated (evergreen!)

- These become canonical references AI systems cite

The "Real-World Case Study" Thread

What it looks like: "How we solved a cascading warp core failure - detailed incident report"

Narrative breakdown of actual problem-solving.

How to encourage:

- Invite experienced users to share "war stories"

- Feature the best ones prominently

- These provide context and practical wisdom that theory doesn't capture

Behaviors To Gently Discourage (Without Being Too Heavy-Handed)

Some patterns don't contribute to citable content. You don't want to ban them - just redirect toward more useful forms.

The "Bump" Without Content

Someone bumps an old thread with just "Anyone figure this out?" or "Still looking for answers" or the never-helpful "Bump."

Redirect approach: "Hey! Instead of bumping, consider starting a new thread with your specific situation. Link back to this one for context. It helps future searchers find both threads!"

The Opinion Without Experience

"I think you should try X" without any basis or context.

Redirect approach: Reply with: "Have you used this approach? What were the results?" or "What makes you recommend this vs. the other suggested approaches?"

Encourages people to ground opinions in experience.

The "Works for Me" Without Details

"This worked!" but no detail about their setup, what they actually did, or any context.

Redirect approach: "Glad it worked! Could you share your ship class and system version? It helps others know if this solution applies to their situation too."

Measuring Success Without Obsessing

You can't directly track AI citations (yet), but you can watch for proxy signals.

Signs your content quality is improving:

- Average thread length increasing (more thorough discussions)

- More questions marked as solved

- Higher engagement on older threads (people finding through search)

- More cross-linking between related threads

- Users starting to naturally use Rich formatting tools

- Decrease in duplicate questions (canonical answers working)

Vanilla Analytics to monitor:

- Check your top and trending threads: are they well-structured?

- Search traffic growing (people finding you through search engines)

- Pages viewed per session increasing (people browsing related content)
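If you can export basic thread data, a couple of the proxy signals above are easy to compute and compare month over month. Here's a minimal Python sketch for two of them: average replies per thread (a stand-in for thread length) and the share of question threads marked solved. The record fields and the function name are hypothetical assumptions, and the sketch assumes each period contains at least one thread.

```python
from statistics import mean


def proxy_metrics(threads):
    """Average replies per thread, plus the share of question threads marked solved."""
    questions = [t for t in threads if t["is_question"]]
    # Avoid dividing by zero in a period with no question threads
    solved_rate = (
        sum(t["solved"] for t in questions) / len(questions) if questions else None
    )
    return {"avg_replies": mean(t["replies"] for t in threads), "solved_rate": solved_rate}


# Hypothetical monthly exports
last_month = [
    {"replies": 2, "is_question": True, "solved": False},
    {"replies": 4, "is_question": True, "solved": True},
    {"replies": 3, "is_question": False, "solved": False},
]
this_month = [
    {"replies": 5, "is_question": True, "solved": True},
    {"replies": 3, "is_question": True, "solved": True},
    {"replies": 4, "is_question": False, "solved": False},
]

print(proxy_metrics(last_month))
print(proxy_metrics(this_month))
```

Watch the direction of the trend over several months rather than any single number; one busy week can swing small samples like these.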

Your Action Plan

This week:

- Create recommended posting templates for your top 3 Q&A categories

- Create custom Post Types with custom fields if you want to go above and beyond

- Pick 5 high-traffic threads and subtly improve their formatting

This month:

- Create a "Great Examples" showcase (great to pair with a featured content program)

- Launch your first "Thread Improvement" initiative with power users

- Add custom reactions: "Solved My Problem," "Well Explained," "Me Too!"

This quarter:

- Invite experienced or trusted members to write comprehensive guides

- Create version comparison threads for major systems

- Set up the "mark as outdated" flagging system

- Build category resource pages linking to best threads

Ongoing:

- Use formatting consistently in your own responses

- Encourage (don't force) structure through templates and examples

- Celebrate and feature well-written, comprehensive answers

- Keep high-traffic content fresh with periodic reviews

The Real Goal Here

By 2025, AI-generated answers have become a standard part of search (almost every major search engine now offers them), and generative engine optimization has gone mainstream with them. But here's what matters: you're not optimizing for AI. You're optimizing for clarity, completeness, and usefulness.

You're optimizing for the next human user in need.

The engineer who finds your thread through ChatGPT should get the same value as the one who finds it through Google or who stumbles on it while randomly browsing your community. The structure that helps AI extract and cite information really is the same structure that helps humans quickly find the solution they need.

You're not creating content for robots. You're creating content that's so clear, so complete, and so well-organized that even robots can understand it. That's just good communication and something that every human can get behind.

I touched on this in the previous GEO Action post - your community knows how to solve problems. By taking steps to optimize for GEO, you're just making sure those solutions are written in a way that anyone - human or AI - can find, understand, and apply.

It's not gaming a system. It's just being helpful at scale.